The rise of autonomous vehicles (AVs) promises to revolutionize transportation by offering increased safety, convenience, and efficiency. However, as these self-driving cars become more advanced and ready for mass adoption, they also present significant legal and ethical challenges that need to be addressed. The transition to a world where cars can drive themselves introduces complex issues related to liability, privacy, safety, and decision-making, all of which require careful thought and regulation.

One of the primary legal concerns surrounding autonomous vehicles is determining liability in the event of an accident. In traditional human-driven vehicles, if a driver is at fault, they are responsible for the damages, and insurance can help compensate for losses. But with AVs, the question becomes: who is liable when the car is in control? Is it the manufacturer of the vehicle, the software developer responsible for the self-driving algorithms, or the car owner who may or may not have been actively monitoring the vehicle? In cases of malfunction or system failure, responsibility becomes even more complicated.

As AVs become more prevalent, governments and insurance companies will need to develop new frameworks for assigning responsibility in the event of accidents. Some propose that manufacturers should be held liable for accidents caused by software or hardware malfunctions. Others favor insurance structures that apportion responsibility among the parties involved, such as the manufacturer, the software developer, and the owner. Additionally, legislation may be needed to address liability issues specific to AVs, with a focus on fairness and transparency.

Ethical dilemmas also play a central role in the development of autonomous vehicles. One of the most frequently discussed is the “trolley problem,” a thought experiment that, applied to AVs, imagines a vehicle forced to choose between two harmful outcomes: continuing forward and hitting a group of people, or swerving and harming a single person. How should an autonomous vehicle be programmed to make such life-or-death decisions? Should the car prioritize the safety of its passengers, of pedestrians, or some balance of both? These questions are not merely theoretical; they will have real-world consequences once autonomous vehicles are deployed on public roads.

In response to these concerns, researchers and ethicists are working to define ethical guidelines for autonomous vehicle behavior. The challenge is to reach consensus on which values and principles should guide these decisions. Some advocate utilitarian approaches, which seek to minimize overall harm by choosing the option that harms the fewest people. Others argue that AVs should treat every human life as paramount and should not be programmed to weigh one life against another, regardless of how many lives are at risk. Finding a balance between these perspectives will require input from policymakers, ethicists, engineers, and the public to ensure that AVs behave in ways that align with societal values.

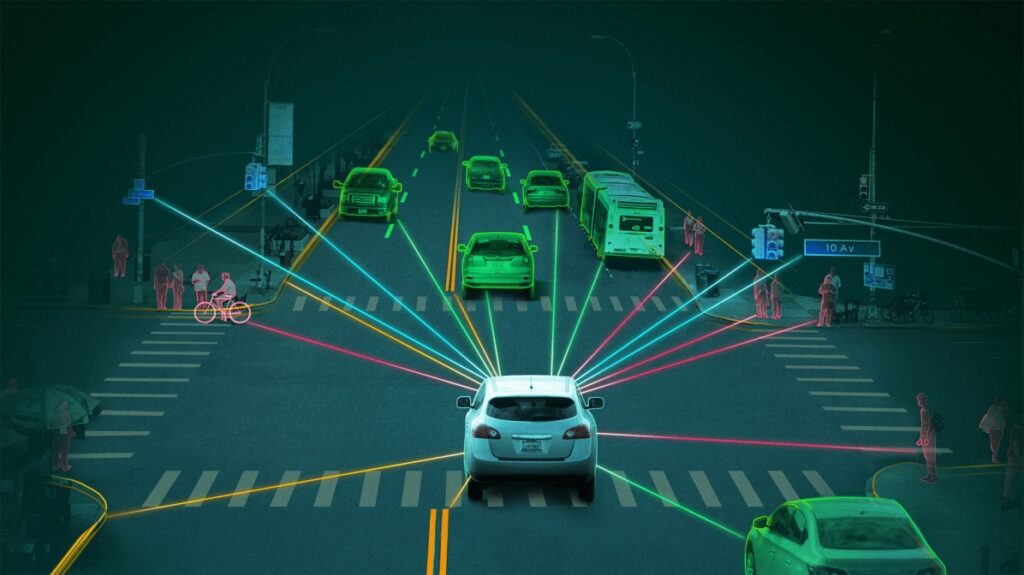

Privacy is another key issue in the realm of autonomous vehicles. Self-driving cars are equipped with a range of sensors, cameras, and GPS tracking systems to navigate their environment. This data can be valuable for improving vehicle performance and safety, but it also raises concerns about surveillance and data security. How much data should autonomous vehicles collect, and who owns that data? Can this data be used for purposes beyond improving the vehicle, such as tracking drivers’ locations or analyzing driving habits for commercial gain?

To address privacy concerns, regulations around data collection and usage need to be put in place. AV manufacturers and developers must be transparent about the data they collect, how it is stored, and who has access to it. Consumers should have the option to control the data generated by their vehicles and should be able to trust that their personal information is protected from misuse. Strong data protection laws and ethical standards will be crucial in maintaining public trust in autonomous vehicle technology.

There are also significant regulatory hurdles to overcome before AVs can be widely deployed. Governments will need to update road safety laws, traffic regulations, and vehicle inspection standards to account for the unique capabilities and limitations of self-driving cars, including how they handle lane positioning, traffic signal recognition, and interaction with pedestrians. Because different countries and regions may regulate AVs differently, manufacturers that wish to deploy globally may face inconsistent or conflicting requirements.

The road to full integration of autonomous vehicles into society will require collaboration between various stakeholders, including governments, manufacturers, consumers, and legal experts. While technology is advancing rapidly, the legal and ethical frameworks needed to support it are still in development. As AVs move from concept to reality, it will be important to balance innovation with safety, fairness, and respect for individual rights.

As we approach a future with autonomous vehicles, these challenges will need to be tackled thoughtfully and comprehensively. The outcome of these debates will shape not only how AVs function on the roads but also how society as a whole integrates this transformative technology. The evolution of AVs presents an opportunity to rethink traditional concepts of mobility and safety, but it also demands careful attention to the legal and ethical issues that will accompany these changes.

From Our Editorial Team

Our editorial team comprises more than 15 highly motivated individuals who work tirelessly to curate the most sought-after content for our subscribers.